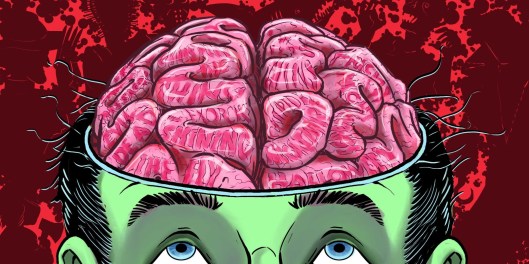

Research suggests that as the brain grows dependent on phone technology, the intellect weakens.

The smartphone is unique in the annals of personal technology. We keep the gadget within reach more or less around the clock, and we use it in countless ways, consulting its apps and checking its messages and heeding its alerts scores of times a day. The smartphone has become a repository of the self, recording and dispensing the words, sounds and images that define what we think, what we experience and who we are. In a 2015 Gallup survey, more than half of iPhone owners said that they couldn’t imagine life without the device.

We love our phones for good reasons. It’s hard to imagine another product that has provided so many useful functions in such a handy form. But while our phones offer convenience and diversion, they also breed anxiety. Their extraordinary usefulness gives them an unprecedented hold on our attention and vast influence over our thinking and behavior. So what happens to our minds when we allow a single tool such dominion over our perception and cognition?

Scientists have begun exploring that question—and what they’re discovering is both fascinating and troubling. Not only do our phones shape our thoughts in deep and complicated ways, but the effects persist even when we aren’t using the devices. As the brain grows dependent on the technology, the research suggests, the intellect weakens.

‘The division of attention impedes reasoning and performance.’

Adrian Ward, a cognitive psychologist and marketing professor at the University of Texas at Austin, has been studying the way smartphones and the internet affect our thoughts and judgments for a decade. In his own work, as well as that of others, he has seen mounting evidence that using a smartphone, or even hearing one ring or vibrate, produces a welter of distractions that makes it harder to concentrate on a difficult problem or job. The division of attention impedes reasoning and performance.

A 2015 Journal of Experimental Psychology study, involving 166 subjects, found that when people’s phones beep or buzz while they’re in the middle of a challenging task, their focus wavers, and their work gets sloppier—whether they check the phone or not. Another 2015 study, which involved 41 iPhone users and appeared in the Journal of Computer-Mediated Communication, showed that when people hear their phone ring but are unable to answer it, their blood pressure spikes, their pulse quickens, and their problem-solving skills decline.

The earlier research didn’t explain whether and how smartphones differ from the many other sources of distraction that crowd our lives. Dr. Ward suspected that our attachment to our phones has grown so intense that their mere presence might diminish our intelligence. Two years ago, he and three colleagues (Kristen Duke and Ayelet Gneezy from the University of California, San Diego, and Disney Research behavioral scientist Maarten Bos) began an ingenious experiment to test his hunch.

The researchers recruited 520 undergraduate students at UCSD and gave them two standard tests of intellectual acuity. One test gauged “available cognitive capacity,” a measure of how fully a person’s mind can focus on a particular task. The second assessed “fluid intelligence,” a person’s ability to interpret and solve an unfamiliar problem. The only variable in the experiment was the location of the subjects’ smartphones. Some of the students were asked to place their phones in front of them on their desks; others were told to stow their phones in their pockets or handbags; still others were required to leave their phones in a different room.

‘As the phone’s proximity increased, brainpower decreased.’

The results were striking. In both tests, the subjects whose phones were in view posted the worst scores, while those who left their phones in a different room did the best. The students who kept their phones in their pockets or bags came out in the middle. As the phone’s proximity increased, brainpower decreased.

In subsequent interviews, nearly all the participants said that their phones hadn’t been a distraction—that they hadn’t even thought about the devices during the experiment. They remained oblivious even as the phones disrupted their focus and thinking.

A second experiment conducted by the researchers produced similar results, while also revealing that the more heavily students relied on their phones in their everyday lives, the greater the cognitive penalty they suffered.

In an April article in the Journal of the Association for Consumer Research, Dr. Ward and his colleagues wrote that the “integration of smartphones into daily life” appears to cause a “brain drain” that can diminish such vital mental skills as “learning, logical reasoning, abstract thought, problem solving, and creativity.” Smartphones have become so entangled with our existence that, even when we’re not peering or pawing at them, they tug at our attention, diverting precious cognitive resources. Just suppressing the desire to check our phone, which we do routinely and subconsciously throughout the day, can debilitate our thinking. The fact that most of us now habitually keep our phones “nearby and in sight,” the researchers noted, only magnifies the mental toll.

Dr. Ward’s findings are consistent with other recently published research. In a similar but smaller 2014 study (involving 47 subjects) in the journal Social Psychology, psychologists at the University of Southern Maine found that people who had their phones in view, albeit turned off, during two demanding tests of attention and cognition made significantly more errors than did a control group whose phones remained out of sight. (The two groups performed about the same on a set of easier tests.)

In another study, published in Applied Cognitive Psychology in April, researchers examined how smartphones affected learning in a lecture class with 160 students at the University of Arkansas at Monticello. They found that students who didn’t bring their phones to the classroom scored a full letter-grade higher on a test of the material presented than those who brought their phones. It didn’t matter whether the students who had their phones used them or not: All of them scored equally poorly. A study of 91 secondary schools in the U.K., published last year in the journal Labour Economics, found that when schools ban smartphones, students’ examination scores go up substantially, with the weakest students benefiting the most.

It isn’t just our reasoning that takes a hit when phones are around. Social skills and relationships seem to suffer as well. Because smartphones serve as constant reminders of all the friends we could be chatting with electronically, they pull at our minds when we’re talking with people in person, leaving our conversations shallower and less satisfying.

In a study conducted at the University of Essex in the U.K., 142 participants were divided into pairs and asked to converse in private for 10 minutes. Half talked with a phone in the room, while half had no phone present. The subjects were then given tests of affinity, trust and empathy. “The mere presence of mobile phones,” the researchers reported in 2013 in the Journal of Social and Personal Relationships, “inhibited the development of interpersonal closeness and trust” and diminished “the extent to which individuals felt empathy and understanding from their partners.” The downsides were strongest when “a personally meaningful topic” was being discussed. The experiment’s results were validated in a subsequent study by Virginia Tech researchers, published in 2016 in the journal Environment and Behavior.

The evidence that our phones can get inside our heads so forcefully is unsettling. It suggests that our thoughts and feelings, far from being sequestered in our skulls, can be skewed by external forces we’re not even aware of.

Scientists have long known that the brain is a monitoring system as well as a thinking system. Its attention is drawn toward any object that is new, intriguing or otherwise striking—that has, in the psychological jargon, “salience.” Media and communications devices, from telephones to TV sets, have always tapped into this instinct. Whether turned on or switched off, they promise an unending supply of information and experiences. By design, they grab and hold our attention in ways natural objects never could.

But even in the history of captivating media, the smartphone stands out. It is an attention magnet unlike any our minds have had to grapple with before. Because the phone is packed with so many forms of information and so many useful and entertaining functions, it acts as what Dr. Ward calls a “supernormal stimulus,” one that can “hijack” attention whenever it is part of our surroundings—which it always is. Imagine combining a mailbox, a newspaper, a TV, a radio, a photo album, a public library and a boisterous party attended by everyone you know, and then compressing them all into a single, small, radiant object. That is what a smartphone represents to us. No wonder we can’t take our minds off it.

The irony of the smartphone is that the qualities we find most appealing (its constant connection to the net, its multiplicity of apps, its responsiveness, its portability) are the very ones that give it such sway over our minds. Phone makers like Apple and Samsung and app writers like Facebook and Google design their products to consume as much of our attention as possible during every one of our waking hours, and we thank them by buying millions of the gadgets and downloading billions of the apps every year.

A quarter-century ago, when we first started going online, we took it on faith that the web would make us smarter: More information would breed sharper thinking. We now know it isn’t that simple. The way a media device is designed and used exerts at least as much influence over our minds as does the information that the device unlocks.

‘People’s knowledge may dwindle as gadgets grant them easier access to online data.’

As strange as it might seem, people’s knowledge and understanding may actually dwindle as gadgets grant them easier access to online data stores. In a seminal 2011 study published in Science, a team of researchers, led by the Columbia University psychologist Betsy Sparrow and including the late Harvard memory expert Daniel Wegner, had a group of volunteers read 40 brief, factual statements (such as “The space shuttle Columbia disintegrated during re-entry over Texas in Feb. 2003”) and then type the statements into a computer. Half the people were told that the machine would save what they typed; half were told that the statements would be immediately erased.

Afterward, the researchers asked the subjects to write down as many of the statements as they could remember. Those who believed that the facts had been recorded in the computer demonstrated much weaker recall than those who assumed the facts wouldn’t be stored. Anticipating that information would be readily available in digital form seemed to reduce the mental effort that people made to remember it. The researchers dubbed this phenomenon the “Google effect” and noted its broad implications: “Because search engines are continually available to us, we may often be in a state of not feeling we need to encode the information internally. When we need it, we will look it up.”

Now that our phones have made it so easy to gather information online, our brains are likely offloading even more of the work of remembering to technology. If the only thing at stake were memories of trivial facts, that might not matter. But, as the pioneering psychologist and philosopher William James said in an 1892 lecture, “the art of remembering is the art of thinking.” Only by encoding information in our biological memory can we weave the rich intellectual associations that form the essence of personal knowledge and give rise to critical and conceptual thinking. No matter how much information swirls around us, the less well-stocked our memory, the less we have to think with.

‘We aren’t very good at distinguishing the knowledge we keep in our heads from the information we find on our phones.’

This story has a twist. It turns out that we aren’t very good at distinguishing the knowledge we keep in our heads from the information we find on our phones or computers. As Dr. Wegner and Dr. Ward explained in a 2013 Scientific American article, when people call up information through their devices, they often end up suffering from delusions of intelligence. They feel as though “their own mental capacities” had generated the information, not their devices. “The advent of the ‘information age’ seems to have created a generation of people who feel they know more than ever before,” the scholars concluded, even though “they may know ever less about the world around them.”

That insight sheds light on our society’s current gullibility crisis, in which people are all too quick to credit lies and half-truths spread through social media by Russian agents and other bad actors. If your phone has sapped your powers of discernment, you’ll believe anything it tells you.

Data, the novelist and critic Cynthia Ozick once wrote, is “memory without history.” Her observation points to the problem with allowing smartphones to commandeer our brains. When we constrict our capacity for reasoning and recall or transfer those skills to a gadget, we sacrifice our ability to turn information into knowledge. We get the data but lose the meaning. Upgrading our gadgets won’t solve the problem. We need to give our minds more room to think. And that means putting some distance between ourselves and our phones.

Mr. Carr is the author of “The Shallows” and “Utopia Is Creepy,” among other books.

Jennifer Delgado Suárez
